The Gaia Cluster

Overview

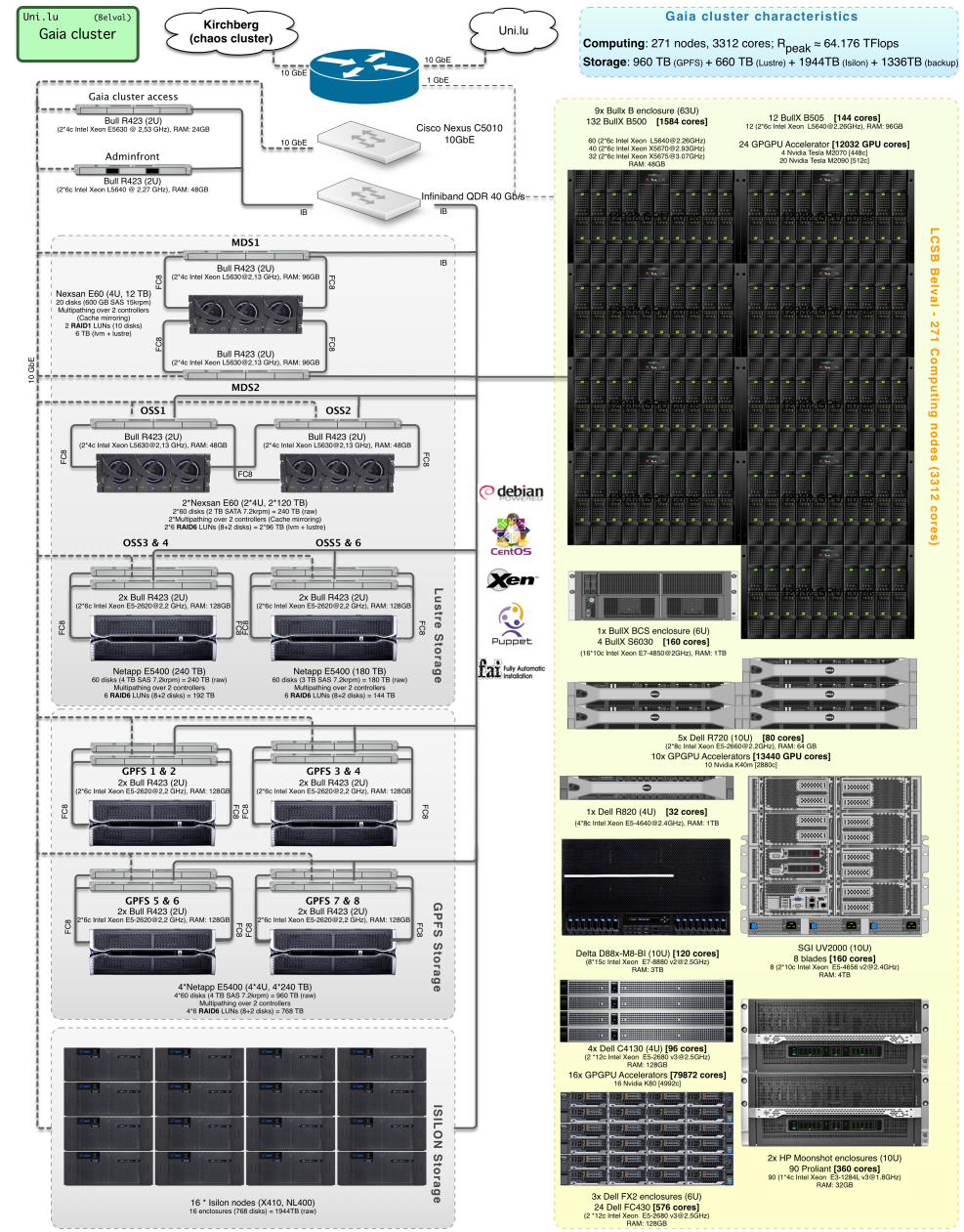

The cluster is organized as follows:

Computing Capacity

The cluster is composed of the following computing elements:

In addition to the above list, since 2013 we have been testing cutting-edge high-density computing enclosures based on low-power ARM Cortex-A9 processors (4C, 1.1GHz). The 2 Boston Viridis enclosures we are experimenting with feature 96 ARM Cortex-A9 processors (thus offering a total of 384 cores). Access to these special nodes is available on request.

As for the Chaos cluster, the computing nodes are quite heterogeneous, yet they share the same processor architecture (Intel 64-bit), meaning that code compiled on one of the nodes can run on all the others, unless it uses special features such as the AVX instruction set.

- Haswell processors (gaia-[155-184], moonshot[1,2]-[1-45] nodes) perform 16 DP ops/cycle and support AVX2/FMA3.
- Sandy Bridge processors (gaia-[74-79] nodes) and Ivy Bridge processors (gaia-[80,81]) perform 8 DP ops/cycle and support the AVX instruction set.

The previous generations of processors (Westmere) only support 4 DP ops/cycle.
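To make the ops/cycle figures above concrete, here is a minimal sketch that estimates a node's theoretical double-precision peak as cores × clock × DP ops/cycle. The function names, and the 24-core / 2.5 GHz values in the usage comment, are illustrative assumptions and not measured figures for any Gaia node. The flag names (`avx`, `avx2`, `fma`) are those listed on the `flags` line of `/proc/cpuinfo` on Linux.

```python
def dp_ops_per_cycle(cpu_flags):
    """Map CPU feature flags to the DP ops/cycle figures quoted above."""
    flags = set(cpu_flags)
    if {"avx2", "fma"} <= flags:
        return 16  # Haswell-class: AVX2 + FMA3
    if "avx" in flags:
        return 8   # Sandy Bridge / Ivy Bridge: AVX
    return 4       # older generations such as Westmere


def peak_gflops(cores, clock_ghz, cpu_flags):
    """Theoretical peak in GFlops = cores x clock (GHz) x DP ops/cycle."""
    return cores * clock_ghz * dp_ops_per_cycle(cpu_flags)


def read_cpu_flags(path="/proc/cpuinfo"):
    """Return the feature flags of the first CPU listed (Linux only)."""
    with open(path) as f:
        for line in f:
            if line.startswith("flags"):
                return line.split(":", 1)[1].split()
    return []


# Usage on a node (hypothetical 24 cores at 2.5 GHz):
#   peak_gflops(24, 2.5, read_cpu_flags())
```

Running `read_cpu_flags()` on a node also shows directly whether a binary built with AVX2 or FMA3 can be expected to run there.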

GPGPU accelerators

The following GPGPU accelerators are currently available on the Gaia cluster:

| Node | Model | #nodes | #GPU board | #GPU Cores | RPeak |
|---|---|---|---|---|---|
| gaia-[61-62] | NVidia Tesla M2070 | 2 | 2 / node | 448c / board = 1792c | 2.06 TFlops |
| gaia-[63-72] | NVidia Tesla M2090 | 10 | 2 / node | 512c / board = 10240c | 13.3 TFlops |
| gaia-[75-79] | NVidia Tesla K40m | 5 | 2 / node | 2880c / board = 28800c | 14.3 TFlops |
| gaia-[179-182] | NVidia Tesla K80 | 4 | 4 / node | 4992c / board = 79872c | 46.56 TFlops |
| Total: | | 21 | 50 | 120704 | 76.22 TFlops |

More details in the accelerators section.
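The totals row of the GPGPU table can be rechecked with a few lines of arithmetic. The sketch below copies the per-row figures from the table; the variable and function names are only illustrative.

```python
# (node range, nodes, boards per node, cores per board, RPeak in TFlops),
# copied from the GPGPU table above.
GPU_NODES = [
    ("gaia-[61-62]", 2, 2, 448, 2.06),
    ("gaia-[63-72]", 10, 2, 512, 13.3),
    ("gaia-[75-79]", 5, 2, 2880, 14.3),
    ("gaia-[179-182]", 4, 4, 4992, 46.56),
]


def gpu_totals(rows):
    """Return (nodes, boards, cores, RPeak in TFlops) summed over all rows."""
    nodes = sum(n for _, n, _, _, _ in rows)
    boards = sum(n * b for _, n, b, _, _ in rows)
    cores = sum(n * b * c for _, n, b, c, _ in rows)
    rpeak = round(sum(r for *_, r in rows), 2)
    return nodes, boards, cores, rpeak


print(gpu_totals(GPU_NODES))  # (21, 50, 120704, 76.22)
```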

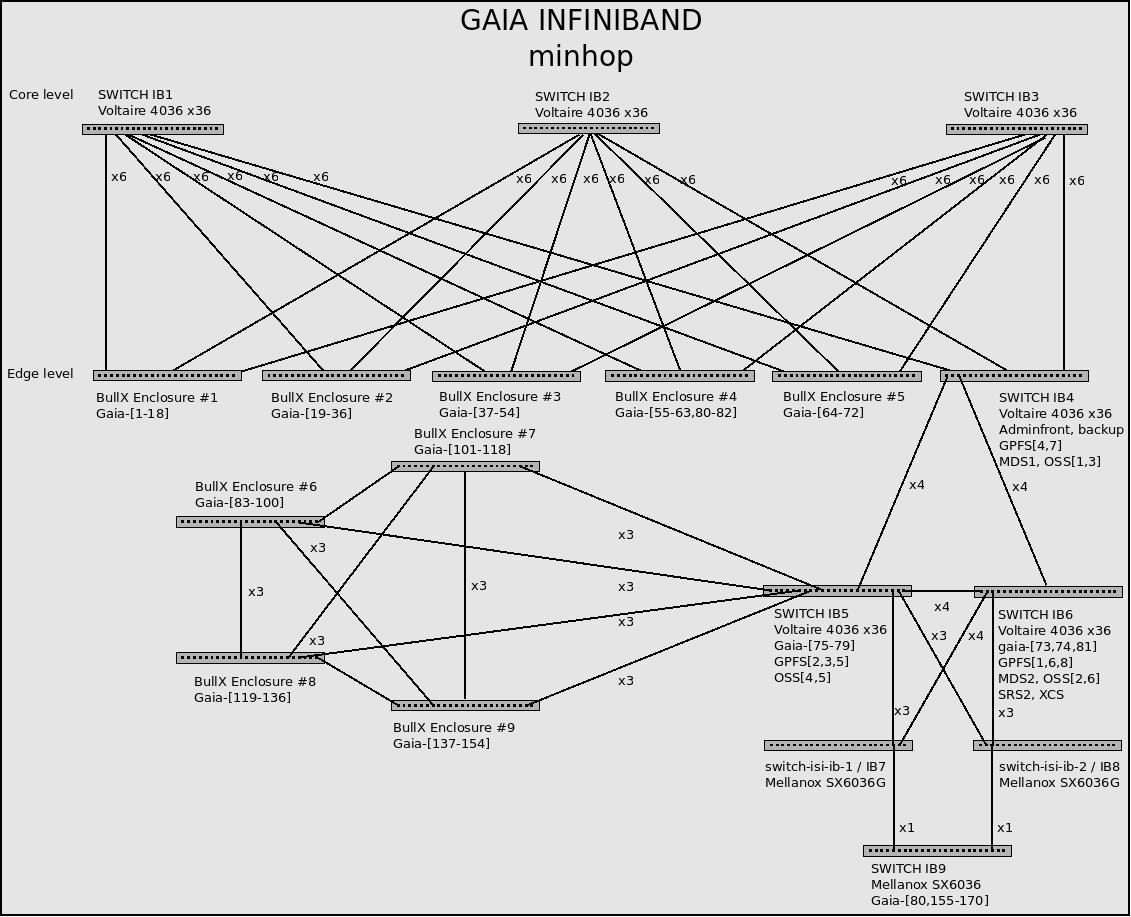

Interconnect

The interconnect is composed of an Infiniband QDR (40Gb/s) network, split into 2 pools, configured in a minhop topology.

The following schema describes the topology of the Gaia Infiniband Network.

Additionally, the cluster is connected to the infrastructure of the University using 10Gb Ethernet. A third 1Gb Ethernet network is also used on the cluster, mainly for services and administration purposes.

The moonshot[1-2]-[1..45] nodes do not support Infiniband; they are connected to Gaia’s IB QDR network through 10GbE-to-Infiniband gateways.

Storage / Cluster File System

The cluster relies on 4 types of distributed/parallel file systems to deliver high-performance data storage at a big-data scale (i.e. TB, excluding backup).

| FileSystem | Usage | #encl | #disk | Raw Capacity [TB] | Max I/O Bandwidth |
|---|---|---|---|---|---|
| XFS | Backup | 2 | 120 | | Read: 1.5 GB/s / Write: 750 GB/s |
| GPFS | Home/Work | 4 | 240 | | Read: 7 GiB/s / Write: 6.5 GiB/s |
| Lustre | Scratch | 5 | 260 | | Read: 3 GiB/s / Write: 1.5 GiB/s |
| Isilon OneFS | Projects | 29 | 1044 | | n/a |
| Total: | | 43 | 1798 | TB (excl. backup) | |

- Lustre: 477 TB

- GPFS: 700 TB

- Isilon: 3188 TB

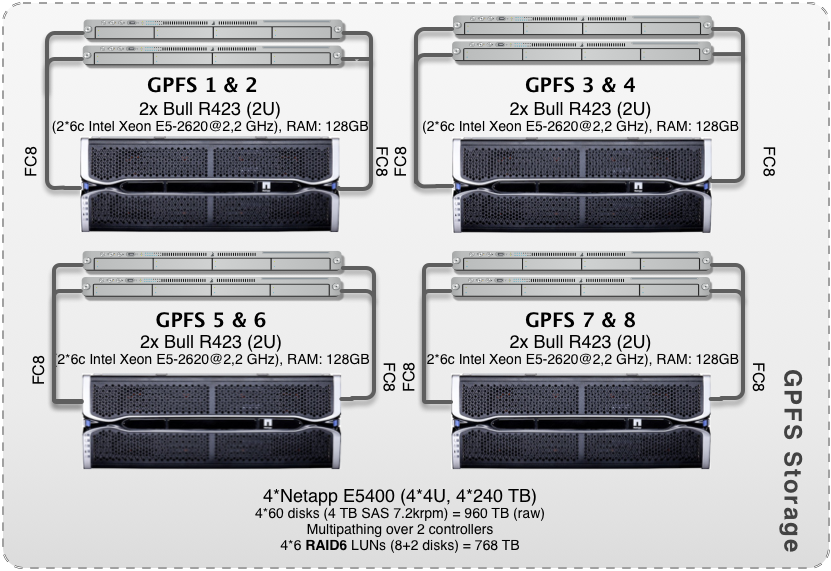

GPFS storage

In terms of storage, a dedicated GPFS system is responsible for sharing specific folders (most importantly, users' home directories) across the nodes of the cluster.

This system is composed of 8 servers and 4 NetApp E5400 disk enclosures, containing a total of 240 disks (4TB SATA, 7.2k rpm). The raw capacity is 960TB, split into 24 RAID 6 groups of 10 disks each (8+2).
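The capacity figures follow from simple arithmetic on the disk layout. The sketch below (function name and parameters are illustrative) reproduces the raw capacity and derives an upper bound on the space left after RAID 6 parity. That bound ignores file-system overhead, spare disks and TB/TiB conversion, so the capacity actually available to users is lower.

```python
def raid6_capacity(disks, disk_tb, group_size=10, parity_per_group=2):
    """Return (groups, raw_tb, usable_tb) for disks split into RAID 6 groups.

    usable_tb only subtracts the parity disks (2 per group for RAID 6);
    it is an upper bound, not the capacity reported by the file system.
    """
    if disks % group_size:
        raise ValueError("disks must be a multiple of group_size")
    groups = disks // group_size
    raw_tb = disks * disk_tb
    usable_tb = groups * (group_size - parity_per_group) * disk_tb
    return groups, raw_tb, usable_tb


print(raid6_capacity(240, 4))  # (24, 960, 768)
```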

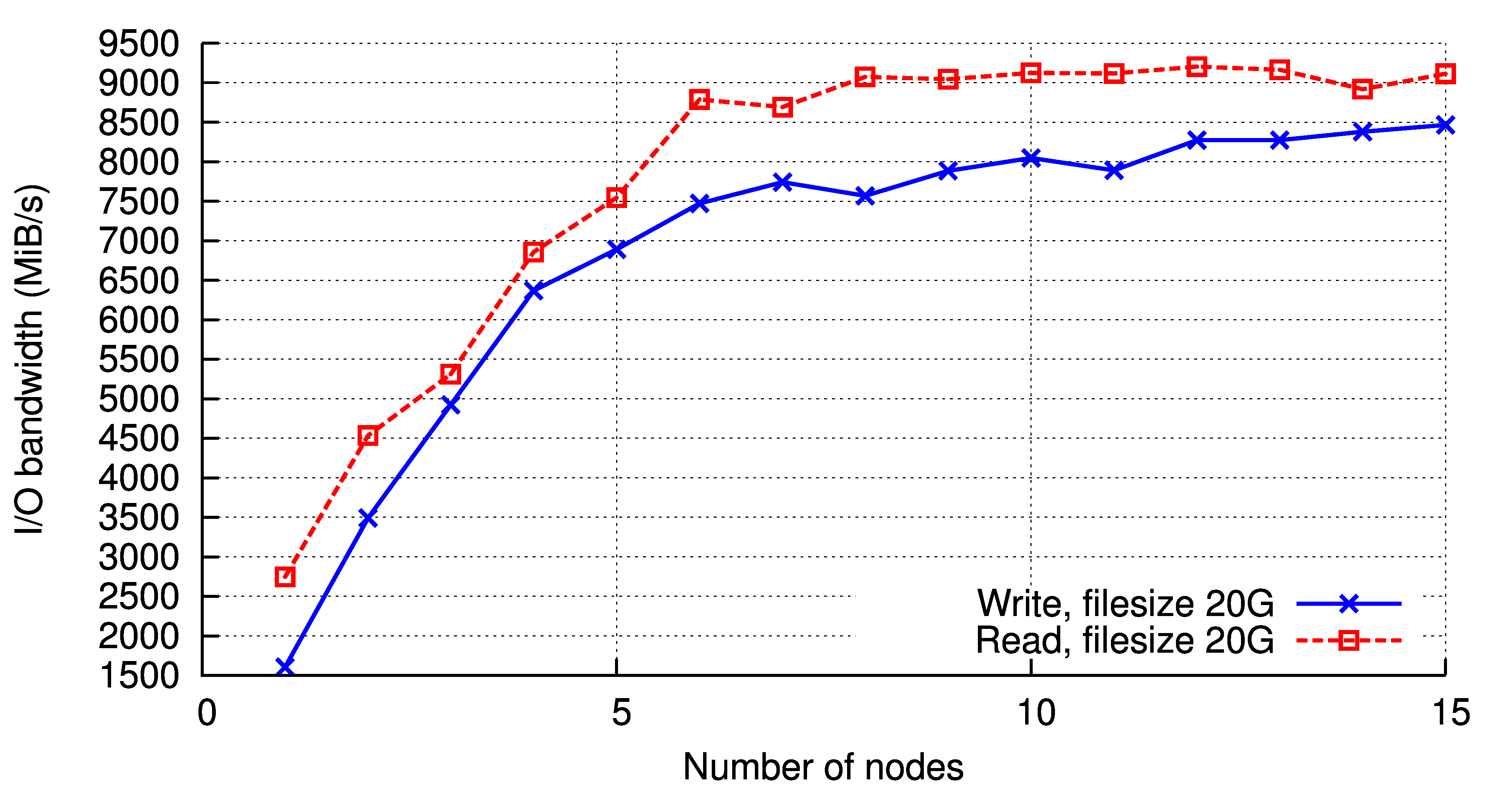

A benchmark of the GPFS storage with a growing number of concurrent nodes has been performed. The results are displayed below:

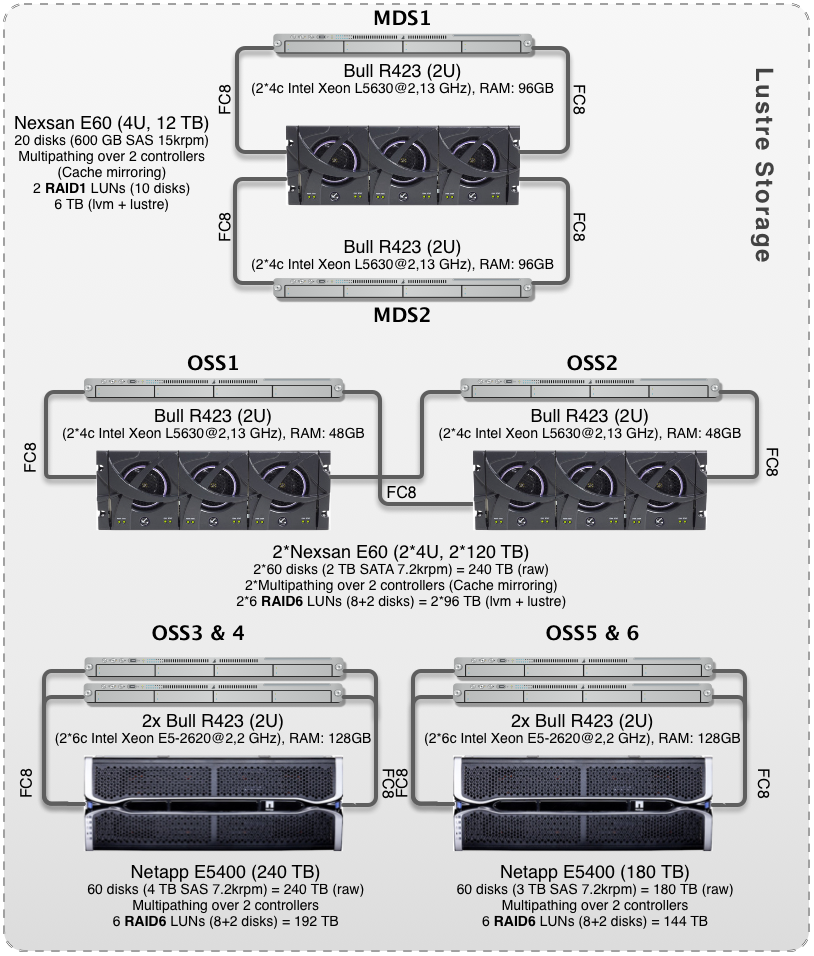

Lustre storage

We also provide a Lustre storage space (2 MDS servers, 6 OSS servers, 3 Nexsan E60 bays & 2 NetApp E5400), which is used as the $SCRATCH directory.

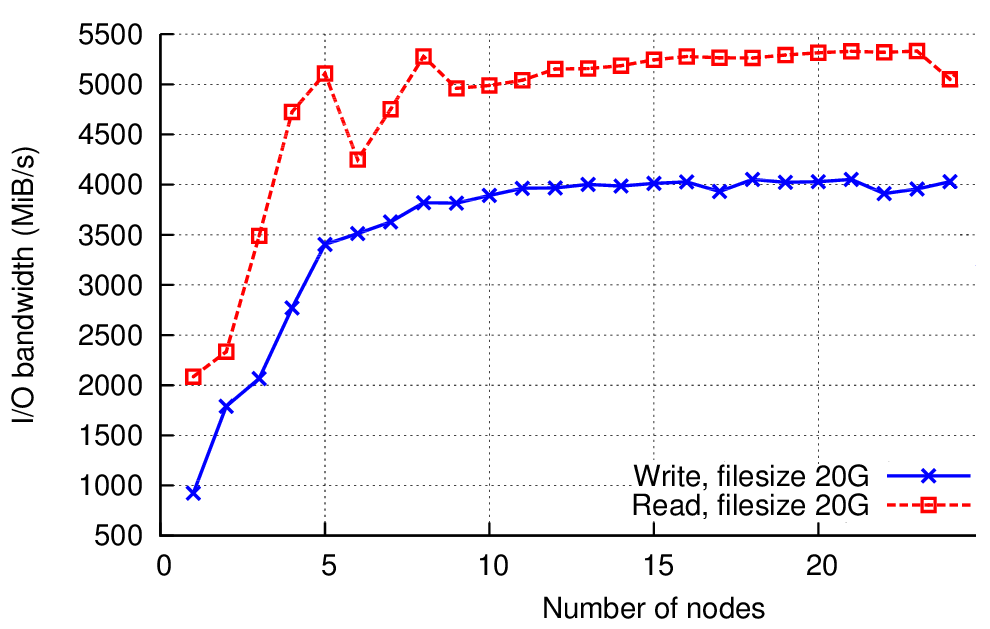

A benchmark of the Lustre storage with a growing number of concurrent nodes has been performed. The results are displayed below:

Isilon / OneFS

In 2014, the SIU, the UL HPC and the LCSB joined forces (and funding) to acquire a scalable and modular NAS solution able to meet the need for internal big-data storage, i.e. to provide space for centralized data and backups of all devices used by UL staff and for all research-related data, including the data processed on the UL HPC platform.

Following a public call for tender released in 2014, the EMC Isilon system was selected, with effective deployment in 2015. It is physically hosted in the new CDC (Centre de Calcul) server room in the Maison du Savoir. Composed of 16 enclosures running the OneFS file system, it currently offers an effective capacity of 1.851 PB.

Local storage

All the nodes provide SSD disks, so you can write to /tmp and get decent performance in terms of I/O operations and throughput.
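One common way to use node-local storage is to do I/O-heavy intermediate work in a private directory under /tmp and copy only the final results to shared storage. The following is a minimal sketch of that pattern; `run_with_local_scratch`, `work` and `results_dir` are hypothetical names, not part of any cluster tooling.

```python
import os
import shutil
import tempfile


def run_with_local_scratch(work, results_dir, scratch_root="/tmp"):
    """Run work(scratch_dir) in a private directory under scratch_root,
    then copy everything it produced into results_dir."""
    os.makedirs(results_dir, exist_ok=True)
    # The temporary directory is removed automatically when the block exits.
    with tempfile.TemporaryDirectory(dir=scratch_root) as scratch_dir:
        work(scratch_dir)
        for name in os.listdir(scratch_dir):
            src = os.path.join(scratch_dir, name)
            dst = os.path.join(results_dir, name)
            if os.path.isdir(src):
                shutil.copytree(src, dst, dirs_exist_ok=True)  # Python 3.8+
            else:
                shutil.copy2(src, dst)
    return results_dir
```

Because the scratch directory is deleted on exit, anything that must survive the job has to be written by `work` into the scratch directory so that it gets copied out.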

Backup: NFS and GlusterFS

We have recycled all NFS-based storage (which used to host the user and project data) as part of our backup pool. In addition, a GlusterFS-based system was deployed on Certon enclosures.

In total, the cumulative storage capacity of the storage area is TB.

I/O Performance

We evaluated the performance of our storage systems:

- Lustre Performance

- GPFS Performance

- Retired NFS storage server read / write

History

The Gaia cluster has existed since late 2011 to serve the growing computing needs of the University of Luxembourg.

The platform has evolved since 2011 as follows:

- November 2011: Initialization of the cluster composed of:
  - gaia-[1-60], Bullx B500, 60 nodes, 720 cores, 1440 GB RAM
  - gaia-[61-62], Bullx B505 (feat. 2 Tesla M2070), 2 nodes, 24 cores, 48 GB RAM
  - 1Gb Ethernet network everywhere, 10Gb Ethernet network for servers, and Infiniband QDR 40Gb/s network
  - NFS Storage, 240TB, xfs
  - Lustre Storage, 240TB, lustre (1.5GB/s write, 2.7 GB/s read)
  - Adminfront server
- 2012: Additional computing nodes
  - gaia-[63-72], Bullx B505 (feat. 2 Tesla M2090), 2 nodes, 24 cores, 48 GB RAM
  - gaia-73, Bullx BCS feat. 4 BullX S6030, “1” node, 160 cores, 1TB RAM
  - gaia-74, Dell R820, 1 node, 32 cores, 1TB RAM
- 2013:
  - Memory upgrade on gaia-[1-60] (48GB), and gaia-[71-72] (96GB)
  - gaia-[75-79], Dell R720 (feat. 1 Tesla K20m), 5 nodes, 80 cores, 320 GB RAM
  - gaia-[83-154], Bullx B500, 72 nodes, 864 cores, 3456 GB RAM
  - New storage server, 60 disks (4TB)
- 2014:
  - gaia-80, Delta D88x-M8-BI, 120 cores, 3TB RAM
  - gaia-81, SGI UV-2000, 160 cores, 4TB RAM
- 2015:
  - Migration from NFS to the new GPFS filesystem (720TB)
  - moonshot1-[1..45] & moonshot2-[1..45], HP Moonshot m710, 90 nodes, 360 cores, 2.88 TB of RAM
  - gaia-[155..178], Dell FC430, 24 nodes, 576 cores, 3TB of RAM
- 2016:
  - gaia-[179..182], Dell C4130 (feat. 4 Tesla K80), 4 nodes, 96 cores, 512 GB RAM
  - GPU upgrades on gaia-[75-79] to 2 Tesla K40m